import numpy as np

9.4. Linear Transformations#

9.4.1. Subspaces of \(\mathbb{R}^n\)#

This section focuses on subsets of \(\mathbb{R}^n\) that preserve \(\mathbb{R}^n\)’s linear structure. We will see that any \(m\times n\) matrix \(A\) admits two important subspaces that contain useful information about the solution set of \(A\vec{x} = \vec{b}\) for some \(\vec{b}\in \mathbb{R}^m\).

Column space and null space#

A subspace of \(\mathbb{R}^n\) is a subset \(H \subset \mathbb{R}^n\) that is closed under addition and scalar multiplication in the following sense:

\(\vec{u} + \vec{v} \in H \quad \forall \vec{u}, \vec{v} \in H\).

\(r\vec{u} \in H \quad \forall \vec{u} \in H\) and \(\forall r \in \mathbb{R}\).

Note that we can choose \(r = 0\), which implies that \(\vec{0}\) is an element of any subspace. The smallest subspace of \(\mathbb{R}^n\) is \(H = \{\vec{0}\}\) and the largest subspace of \(\mathbb{R}^n\) is \(\mathbb{R}^n\) itself. Other subspaces of \(\mathbb{R}^n\) are lines, planes, and their higher-dimensional counterparts that pass through the origin.

Example 1

Let \(\vec{u}, \vec{v} \in \mathbb{R}^2\). Show that \(H := \text{span}(\vec{u}, \vec{v})\) is a subspace of \(\mathbb{R}^2\).

Solution:

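Recall that the span of a set of vectors is the set of all possible linear combinations of those vectors. Let \(\vec{x}, \vec{y} \in \text{span}(\vec{u}, \vec{v})\) and \(r \in \mathbb{R}\). There exist \(c_1, c_2, d_1, d_2 \in \mathbb{R}\) such that \(\vec{x} = c_1 \vec{u} + c_2 \vec{v}\) and \(\vec{y} = d_1 \vec{u} + d_2 \vec{v}\). We have:

\[ \vec{x} + \vec{y} = (c_1 + d_1)\vec{u} + (c_2 + d_2)\vec{v} \quad \text{and} \quad r\vec{x} = (rc_1)\vec{u} + (rc_2)\vec{v}. \]

Both of these vectors are linear combinations of \(\vec{u}\) and \(\vec{v}\), so they are in \(H\).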

In general, if \(\vec{u}_1, \vec{u}_2, \dots, \vec{u}_p \in \mathbb{R}^n\), then the span of \(\vec{u}_1, \vec{u}_2, \dots, \vec{u}_p\) is a subspace \(H\subset \mathbb{R}^n\), and we say \(H\) is spanned (or generated) by \(\vec{u}_1, \vec{u}_2, \dots, \vec{u}_p\).

Any \(m \times n\) matrix \(A\) is associated with important subspaces:

The column space of \(A\), denoted by \(\text{col}(A)\), is the span of the columns of \(A\):

\[ \text{col}(A) = \text{span}\left\lbrace \vec{v}_1, \vec{v}_2, \dots, \vec{v}_n \right\rbrace, \]

where \(\vec{v}_i\), for \(i = 1,2,\dots, n\), are the columns of \(A\). By definition, \(\text{col}(A)\) is a subspace of \(\mathbb{R}^m\).

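The null space of \(A\), denoted by \(\text{null}(A)\), is the solution set of the homogeneous equation \(A\vec{x} = \vec{0}\):

\[ \text{null}(A) = \left\lbrace \vec{x} \in \mathbb{R}^n : A\vec{x} = \vec{0} \right\rbrace. \]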

By Theorem 2 in subsection 1.2, \(\text{null}(A)\) is a subspace of \(\mathbb{R}^n\).

Example 2

Let \(A = \begin{bmatrix} 1 & -1 \\ 2 & 1 \\ -1 & 3 \\ \end{bmatrix}\).

Find \(col(A)\) and \(null(A)\).

Is \(\vec{b} = \begin{bmatrix} 1 \\ 2 \\ 1 \end{bmatrix} \in \text{col}(A)\)?

Is \(\vec{u} = \begin{bmatrix} 1 \\ 1 \end{bmatrix} \in \text{null}(A)\)?

Solution:

\(col(A) = \text{span} \left\lbrace\ \begin{bmatrix} 1\\ 2\\ -1 \end{bmatrix}, \begin{bmatrix} -1\\ 1\\ 3\end{bmatrix}\ \right\rbrace \).

To find \(null(A)\), we solve the homogeneous equation \(A\vec{x} = \vec{0}\). Let’s set up the augmented matrix \([A \ | \ \vec{0}]\) and row-reduce it:

M = np.array([[1, -1, 0], [2, 1, 0], [-1, 3, 0]])

M

array([[ 1, -1, 0],

[ 2, 1, 0],

[-1, 3, 0]])

# Swap two rows
def swap(matrix, row1, row2):
    copy_matrix = np.copy(matrix).astype('float64')
    copy_matrix[row1, :] = matrix[row2, :]
    copy_matrix[row2, :] = matrix[row1, :]
    return copy_matrix

# Multiply all entries in a row by a nonzero number
def scale(matrix, row, scalar):
    copy_matrix = np.copy(matrix).astype('float64')
    copy_matrix[row, :] = scalar * matrix[row, :]
    return copy_matrix

# Replace a row by the sum of itself and a multiple of another row
def replace(matrix, row1, row2, scalar):
    copy_matrix = np.copy(matrix).astype('float64')
    copy_matrix[row1] = matrix[row1] + scalar * matrix[row2]
    return copy_matrix

M1 = replace(M, 1, 0, -2)

M1

array([[ 1., -1., 0.],

[ 0., 3., 0.],

[-1., 3., 0.]])

M2 = replace(M1, 2, 0, 1)

M2

array([[ 1., -1., 0.],

[ 0., 3., 0.],

[ 0., 2., 0.]])

M3 = scale(M2, 1, 1/3)

M3

array([[ 1., -1., 0.],

[ 0., 1., 0.],

[ 0., 2., 0.]])

M4 = replace(M3, 2, 1, -2)

M4

array([[ 1., -1., 0.],

[ 0., 1., 0.],

[ 0., 0., 0.]])

From the row-reduced form, we can see that both columns of \(A\) are pivot columns, so there is no free variable. Therefore, the only solution to the system is the trivial solution: \(null(A)=\{\vec{0}\}\).

\(\vec{b} \in \text{col}(A)\) if and only if \(\vec{b}\) is a linear combination of the columns of \(A\). In other words, if and only if the equation \(A\vec{x} = \vec{b}\) has a solution. Let’s set up the augmented matrix \([A \ | \ \vec{b}]\) and row-reduce it:

import numpy as np

M = np.array([[1, -1, 1], [2, 1, 2], [-1, 3, 1]])

M

array([[ 1, -1, 1],

[ 2, 1, 2],

[-1, 3, 1]])

M1 = replace(M, 1, 0, -2)

M1

array([[ 1., -1., 1.],

[ 0., 3., 0.],

[-1., 3., 1.]])

M2 = replace(M1, 2, 0, 1)

M2

array([[ 1., -1., 1.],

[ 0., 3., 0.],

[ 0., 2., 2.]])

M3 = scale(M2, 1, 1/3)

M3

array([[ 1., -1., 1.],

[ 0., 1., 0.],

[ 0., 2., 2.]])

M4 = replace(M3, 2, 1, -2)

M4

array([[ 1., -1., 1.],

[ 0., 1., 0.],

[ 0., 0., 2.]])

The last row corresponds to the equation \(0 = 2\), which shows that the system is inconsistent. Thus, \(\vec{b}\) is not in \(\text{col}(A)\).

No: \(null(A)\) contains only the zero vector, and \(\vec{u} \neq \vec{0}\). We can verify this directly:

\(\vec{u} \in \text{null}(A)\) if and only if \(A\vec{u} = \vec{0}\):

A = np.array([[1, -1], [2, 1], [-1, 3]])

A

array([[ 1, -1],

[ 2, 1],

[-1, 3]])

u = np.array([[1],[1]])

u

array([[1],

[1]])

#computing Au

Au = A @ u

Au

array([[0],

[3],

[2]])

Thus, \(\vec{u}\) is not in \(null(A)\).
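The same three answers can be cross-checked without row-reducing by hand. The following is a minimal sketch, assuming we rely on NumPy's numerical rank (`np.linalg.matrix_rank`); the vector `b` is taken from the last column of the augmented matrix used above, and the names `b_in_col`, `null_is_trivial`, and `u_in_null` are introduced here only for illustration.

```python
import numpy as np

A = np.array([[1, -1], [2, 1], [-1, 3]])
b = np.array([[1], [2], [1]])   # last column of the augmented matrix above
u = np.array([[1], [1]])

rank_A = np.linalg.matrix_rank(A)
rank_Ab = np.linalg.matrix_rank(np.hstack((A, b)))

# b is in col(A) exactly when appending b to A does not raise the rank
b_in_col = bool(rank_A == rank_Ab)

# null(A) is trivial exactly when rank(A) equals the number of columns of A
null_is_trivial = bool(rank_A == A.shape[1])

# u is in null(A) exactly when A u is the zero vector
u_in_null = bool(np.allclose(A @ u, 0))

print('b in col(A):      ', b_in_col)
print('null(A) is {0}:   ', null_is_trivial)
print('u in null(A):     ', u_in_null)
```

Because the rank is computed in floating point, this kind of check is best treated as a sanity test alongside the row reduction rather than a replacement for it.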

Example 3

Suppose \(A\) is an invertible \(n\times n\) matrix. Then, by the Invertible Matrix Theorem (Theorem 4 in section 2.1), we have the following:

\(col(A) = \mathbb{R}^n\)

\(null(A) = \{\vec{0}\}\)

Basis, Dimension, and Rank#

Let \(H \subset \mathbb{R}^n\) be a subspace. A basis for \(H\) is a linearly independent set in \(H\) that spans \(H\).

According to the Invertible Matrix Theorem (Theorem 4 in section 2.1), the columns of an invertible \(n\times n\) matrix form a linearly independent set that spans \(\mathbb{R}^n\). Thus, the columns of any invertible \(n\times n\) matrix form a basis for \(\mathbb{R}^n\). It’s important to note that bases are not unique.
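As a quick numerical illustration, the sketch below checks that the columns of a matrix form a basis for \(\mathbb{R}^n\) by testing whether the matrix has full rank. The matrix `B` is a hypothetical example chosen for this sketch, not one taken from the text.

```python
import numpy as np

# Hypothetical invertible 2x2 matrix (an assumption made for illustration)
B = np.array([[1.0, 1.0], [1.0, -1.0]])
n = B.shape[0]

# Full rank means the n columns are linearly independent and span R^n
B_is_basis = bool(np.linalg.matrix_rank(B) == n)

# The identity matrix gives a different basis for the same space
I_is_basis = bool(np.linalg.matrix_rank(np.eye(n)) == n)

print(B_is_basis, I_is_basis)
```

Both checks succeed, which is consistent with the remark that a space can have more than one basis.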

Example 4 (The Standard Basis)

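Let

\[ I_n = \begin{bmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{bmatrix} \]

be the \(n \times n\) identity matrix. The columns of \(I_n\), denoted by

\[ \vec{e}_1, \vec{e}_2, \dots, \vec{e}_n, \]

form a basis for \(\mathbb{R}^n\), which is called the standard basis of \(\mathbb{R}^n\). For instance, the standard basis elements for \(\mathbb{R}^3\) are

\[ \vec{e}_1 = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \quad \vec{e}_2 = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \quad \vec{e}_3 = \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}. \]

The following cell computes the standard basis of \(\mathbb{R}^4\):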

#the 4x4 identity matrix

I = np.eye(4)

print(' e1 = \n', np.array([I[0]]).T, '\n')

print(' e2 = \n', np.array([I[1]]).T, '\n')

print(' e3 = \n', np.array([I[2]]).T, '\n')

print(' e4 = \n', np.array([I[3]]).T, '\n')

e1 =

[[1.]

[0.]

[0.]

[0.]]

e2 =

[[0.]

[1.]

[0.]

[0.]]

e3 =

[[0.]

[0.]

[1.]

[0.]]

e4 =

[[0.]

[0.]

[0.]

[1.]]

A basis for col A

The column space of a matrix \(A\) is generated by the set of all columns of \(A\). If \(A\) is invertible, then by the Invertible Matrix Theorem the columns are all pivot columns and form a linearly independent set, and therefore a basis for \(col(A)\). In general, we have:

Theorem 13

The pivot columns of a matrix \(A\) form a basis for \(col(A)\).

Example 5

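Suppose

\[ A = \begin{bmatrix} 1 & 3 & 3 & 2 & -9 \\ -2 & -2 & 2 & -8 & 2 \\ 2 & 3 & 0 & 7 & 1 \\ 3 & 4 & -1 & 11 & 8 \end{bmatrix}. \]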

Find a basis for \(col(A)\).

Find a basis for \(null(A).\)

Solution

We reduce \(A\) to its reduced row echelon form (RREF) to find its pivot columns:

A = np.array([[1,3,3,2,-9], [-2,-2,2,-8,2], [2,3,0,7,1], [3,4,-1,11,8]])

A

array([[ 1, 3, 3, 2, -9],

[-2, -2, 2, -8, 2],

[ 2, 3, 0, 7, 1],

[ 3, 4, -1, 11, 8]])

A1 = replace(A, 1, 0, 2)

A2 = replace(A1, 2, 0, -2)

A3 = replace(A2, 3, 0, -3)

A4 = scale(A3, 1, 1/4)

A5 = replace(A4, 2, 1, 3)

A6 = replace(A5, 3, 1, 5)

A7 = scale(A6, 2, 1/7)

A8 = replace(A7, 0, 1, -3)

A9 = replace(A8, 3, 2, -15)

A10 = replace(A9, 1, 2, 4)

A11 = replace(A10, 0, 2, -3)

A11

array([[ 1., 0., -3., 5., 0.],

[ 0., 1., 2., -1., 0.],

[ 0., 0., 0., 0., 1.],

[ 0., 0., 0., 0., 0.]])

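We can see from the RREF of \(A\) that \(A\) has 3 pivot columns, which are the first two columns and the last column. Therefore, a basis for \(\text{col}(A)\) is the set consisting of the first two columns and the last column of \(A\):

\[ \left\lbrace \begin{bmatrix} 1 \\ -2 \\ 2 \\ 3 \end{bmatrix}, \begin{bmatrix} 3 \\ -2 \\ 3 \\ 4 \end{bmatrix}, \begin{bmatrix} -9 \\ 2 \\ 1 \\ 8 \end{bmatrix} \right\rbrace. \]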

It’s important to note that the columns of the RREF of \(A\) are not necessarily in \(\text{col}(A)\), and we cannot use them to form a basis for \(\text{col}(A)\).
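The row reduction above was carried out one step at a time. As a rough sketch of how the same procedure could be automated, the hypothetical helper `rref` below performs Gauss-Jordan elimination with partial pivoting and returns both the reduced matrix and the indices of the pivot columns. The function name, the tolerance `tol`, and the pivoting strategy are assumptions made for this sketch; they are not part of the text.

```python
import numpy as np

def rref(matrix, tol=1e-12):
    """Return (R, pivots): the RREF of `matrix` and its pivot column indices."""
    R = np.array(matrix, dtype=float)
    rows, cols = R.shape
    pivots = []
    r = 0                                   # index of the next pivot row
    for c in range(cols):
        if r == rows:
            break
        # choose the entry of largest absolute value in column c, at or below row r
        p = r + int(np.argmax(np.abs(R[r:, c])))
        if abs(R[p, c]) < tol:
            continue                        # no pivot in this column
        R[[r, p]] = R[[p, r]]               # swap the pivot row into place
        R[r] = R[r] / R[r, c]               # scale so the pivot equals 1
        for i in range(rows):
            if i != r:
                R[i] = R[i] - R[i, c] * R[r]   # clear the rest of column c
        pivots.append(c)
        r += 1
    return R, pivots

A = np.array([[1, 3, 3, 2, -9], [-2, -2, 2, -8, 2], [2, 3, 0, 7, 1], [3, 4, -1, 11, 8]])
R, pivots = rref(A)

# A basis for col(A) uses the pivot columns of A itself, not the columns of R
basis = A[:, pivots]

print('pivot columns (0-based):', pivots)
print(basis)
```

Note that the basis is read off from `A`, in line with the warning above that the columns of the RREF are not necessarily in \(\text{col}(A)\).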

To find a basis for \(\text{null}(A)\), we solve \(A\vec{x}=\vec{0}\) in the usual manner. Let’s form the augmented matrix \([A \ | \ \vec{0}]\):

A0 = np.array([[1,3,3,2,-9,0], [-2,-2,2,-8,2,0], [2,3,0,7,1,0], [3,4,-1,11,8,0]])

A1 = replace(A0, 1, 0, 2)

A2 = replace(A1, 2, 0, -2)

A3 = replace(A2, 3, 0, -3)

A4 = scale(A3, 1, 1/4)

A5 = replace(A4, 2, 1, 3)

A6 = replace(A5, 3, 1, 5)

A7 = scale(A6, 2, 1/7)

A8 = replace(A7, 0, 1, -3)

A9 = replace(A8, 3, 2, -15)

A10 = replace(A9, 1, 2, 4)

A11 = replace(A10, 0, 2, -3)

A11

array([[ 1., 0., -3., 5., 0., 0.],

[ 0., 1., 2., -1., 0., 0.],

[ 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0.]])

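From this, we can see that \(x_3\) and \(x_4\) are free variables and that the general solution is:

\[ \vec{x} = \begin{bmatrix} 3x_3 - 5x_4 \\ -2x_3 + x_4 \\ x_3 \\ x_4 \\ 0 \end{bmatrix} = x_3 \begin{bmatrix} 3 \\ -2 \\ 1 \\ 0 \\ 0 \end{bmatrix} + x_4 \begin{bmatrix} -5 \\ 1 \\ 0 \\ 1 \\ 0 \end{bmatrix}. \]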

It is also clear that \(\begin{bmatrix} 3\\ -2\\ 1\\ 0\\ 0\end{bmatrix}\) and \(\begin{bmatrix} - 5 \\ 1 \\0\\ 1\\ 0\end{bmatrix}\) are linearly independent. So they form a basis for \(null(A)\).
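Let’s double-check this basis numerically. The sketch below stores the two vectors as the columns of a matrix `N` (a name introduced here for illustration), verifies that \(A\) sends each of them to the zero vector, and uses the rank of `N` to confirm that they are linearly independent.

```python
import numpy as np

A = np.array([[1, 3, 3, 2, -9], [-2, -2, 2, -8, 2], [2, 3, 0, 7, 1], [3, 4, -1, 11, 8]])

# The two candidate basis vectors of null(A), stored as the columns of N
N = np.array([[3, -5], [-2, 1], [1, 0], [0, 1], [0, 0]])

# Each column of A @ N is A applied to one of the vectors
AN = A @ N
print(AN)

# Rank 2 means the two columns of N are linearly independent
rank_N = np.linalg.matrix_rank(N)
print(rank_N)
```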

Note that a subspace \(H\) can have different bases. For example, the following sets are both bases for \(\mathbb{R}^2\):

However, all bases have something in common:

Theorem 14

All bases of a subspace \(H\) in \(\mathbb{R}^n\) have the same number of vectors.

The dimension of a subspace \(H\), denoted as \(dim(H)\), is the number of vectors in any of its bases. Furthermore, we define \(dim(\{\vec{0}\})\) to be \(0\). The dimension of the column space of a matrix \(A\), denoted as \(rank(A)\), is referred to as the rank of \(A\).

Example 6

Show that \(dim(\mathbb{R}^n) = n.\)

Solution:

We can use Example 4, where we saw that the columns of the identity matrix \(I_n\), denoted as \(\{\vec{e}_1, \dots, \vec{e}_n\}\), form a basis for \(\mathbb{R}^n\).

Since there are \(n\) columns in the identity matrix \(I_n\), the dimension of \(\mathbb{R}^n\) is equal to \(n\). Hence, \(dim(\mathbb{R}^n) = n\).

Example 7

Find the dimensions of the subspaces of \(\mathbb{R}^3\).

Solution:

In \(\mathbb{R}^3\), we have the following subspaces:

The zero subspace \(\{\vec{0}\}\), which has zero dimension.

Lines passing through the origin, which have one dimension: every line through the origin in \(\mathbb{R}^3\) is spanned by a single nonzero vector.

Planes passing through the origin, which have two dimensions: every plane through the origin in \(\mathbb{R}^3\) is spanned by two linearly independent vectors.

The entire space \(\mathbb{R}^3\), which has three dimensions.
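The four cases above can be illustrated numerically: the dimension of the span of a list of vectors equals the rank of the matrix having those vectors as columns. The helper `span_dimension` and the sample vectors below are hypothetical choices made for this sketch.

```python
import numpy as np

def span_dimension(vectors):
    """Dimension of the span of `vectors` (hypothetical helper)."""
    # Stack the vectors as columns; the rank is the dimension of their span
    return int(np.linalg.matrix_rank(np.column_stack(vectors)))

zero_subspace = [np.zeros(3)]
line = [np.array([1.0, 2.0, 3.0])]
plane = [np.array([1.0, 0.0, 0.0]), np.array([0.0, 1.0, 1.0])]
whole_space = [np.array([1.0, 0.0, 0.0]),
               np.array([0.0, 1.0, 0.0]),
               np.array([0.0, 0.0, 1.0])]

dims = [span_dimension(s) for s in (zero_subspace, line, plane, whole_space)]
print(dims)
```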

The next theorem states that the sum of the dimensions of the column space and the null space is equal to the number of columns of the matrix. Additionally, it provides a way to determine the dimensions of the column space and null space based on the number of pivot and non-pivot columns of the matrix, respectively.

Theorem 15 (Rank-Nullity Theorem)

If \(A\) is an \(m \times n\) matrix, then \(dim(col(A)) + dim(null(A)) = n\). Moreover,

\(dim(col(A)) =\) the number of pivot columns of \(A\).

\(dim(null(A)) =\) the number of non-pivot columns of \(A\).

Example 8

Find the rank and dimension of \(null(A)\), where \(A = \begin{bmatrix} 1 & 3 &3 & 2 &-9 \\ -2 & -2 & 2 & -8 &2 \\ 2 & 3 &0 & 7 & 1 \\ 3 & 4 & -1 & 11 & 8 \end{bmatrix}\)

Solution

From the solution of Example 5, we know that the RREF of the augmented matrix \([A \ | \ \vec{0}]\) is

A11

array([[ 1., 0., -3., 5., 0., 0.],

[ 0., 1., 2., -1., 0., 0.],

[ 0., 0., 0., 0., 1., 0.],

[ 0., 0., 0., 0., 0., 0.]])

Its first five columns form the RREF of \(A\), which has three pivot columns and two non-pivot columns. Therefore, \(rank(A) = 3\) and \(dim(null(A)) = 2\).
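These numbers can also be obtained numerically. The following sketch, which assumes NumPy's `np.linalg.matrix_rank` and `np.linalg.svd`, computes the rank, deduces the nullity from the Rank-Nullity Theorem, and then extracts a (different) basis of \(null(A)\) from the singular value decomposition. Using the SVD here is an alternative technique, not the method of the text.

```python
import numpy as np

A = np.array([[1, 3, 3, 2, -9], [-2, -2, 2, -8, 2], [2, 3, 0, 7, 1], [3, 4, -1, 11, 8]])
m, n = A.shape

rank = int(np.linalg.matrix_rank(A))
nullity = n - rank                      # Rank-Nullity Theorem

# In A = U S Vh, the rows of Vh beyond the first `rank` rows span null(A)
_, _, Vh = np.linalg.svd(A)
null_basis = Vh[rank:].T                # one basis vector per column

print('rank(A) =', rank, ' dim(null(A)) =', nullity)
print(np.allclose(A @ null_basis, 0))   # each basis vector is sent to zero
```

The basis returned by the SVD is orthonormal, so it generally differs from the one found by row reduction, even though both span the same subspace.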

Exercises#


Determine which of the following sets are subspaces:

a.

\[\begin{split} H = \left\lbrace \ \begin{bmatrix} x \\ y \\ z \end{bmatrix} \in \mathbb{R}^3 \mbox{ with } x \geq 0 \right\rbrace. \end{split}\]

b.

\[\begin{split} H = \left\lbrace \ \begin{bmatrix} x \\ 1 \\2x \end{bmatrix} \in \mathbb{R}^3 \right\rbrace. \end{split}\]

Let

Find the dimension of \(col(A)\) and \(null(A)\) .

Suppose \(A\) is a \(5 \times 5\) matrix, and \(A\) has 3 pivot columns.

a. Prove that \(A\vec{x}=\vec{0}\) must have nontrivial solutions.

b. Is there an example of \(A\) where \(col(A)= null(A)\) ?

Find an explicit example of a \(4\times 4\) matrix \(A\) which has \(col(A) = null(A)\).

9.4.2. Introduction to Linear Transformations#

This section introduces linear transformations (sometimes referred to as linear maps) and explores their relationship with linear systems. We discuss how the existence and uniqueness of solutions to linear systems can be reformulated in terms of linear transformations.

Motivation#

In many applications, the matrix equation \(A\vec{x}=\vec{b}\) arises in a way that is not directly connected to linear combinations of vectors. In fact, we can think of an \(m \times n\) matrix \(A\) as a function that transforms \(\vec{x}\in \mathbb{R}^n\) to \(A\vec{x}\in \mathbb{R}^m\). With this perspective, finding the solution set of a linear system \(A\vec{x}=\vec{b}\) is the same as finding the set of all \(\vec{x}\) that \(A\) transforms into \(\vec{b}\). Theorem 1 in the previous section states that:

\(A(\vec{u}+ \vec{v}) = A \vec{u} + A \vec{v}\)

\(A(c\vec{u}) = c A\vec{u}\)

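In general, any function with these properties is called a linear transformation. More precisely, a function

\[ T: \mathbb{R}^m \to \mathbb{R}^n \]

is a linear transformation if it satisfies the following conditions:

\[ T(\vec{u} + \vec{v}) = T(\vec{u}) + T(\vec{v}) \quad \forall \vec{u}, \vec{v} \in \mathbb{R}^m \]

\[ T(c\vec{u}) = cT(\vec{u}) \quad \forall \vec{u} \in \mathbb{R}^m \quad \text{and}\quad \forall c \in \mathbb{R} \]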

These two conditions lead to the following useful fact:

\(T: \mathbb{R}^m \to \mathbb{R}^n\) is a linear transformation if and only if

\(T(\vec{0}) = \vec{0}\)

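\(T(c\vec{u} + d\vec{v}) = cT(\vec{u}) + dT(\vec{v}) \quad \forall \vec{u}, \vec{v} \in \mathbb{R}^m \quad \text{and}\quad \forall c, d\in \mathbb{R}\)

The last property can also be generalized to \(p\) vectors:

\[ T(c_1\vec{v}_1 + c_2\vec{v}_2 + \dots + c_p\vec{v}_p) = c_1T(\vec{v}_1) + c_2T(\vec{v}_2) + \dots + c_pT(\vec{v}_p) \]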

This property states that \(T\) preserves linear combinations of vectors.
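To get a feel for this property, the sketch below tests it numerically on random inputs. The matrix `A`, the maps `T` and `S`, and the helper `preserves_combinations` are all hypothetical names introduced for this illustration. A passing test is only numerical evidence, not a proof of linearity; a failing test, however, does show that a map is not linear.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 3x2 matrix (an assumption made for illustration)
A = np.array([[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]])

def T(x):
    # a matrix map, which is linear
    return A @ x

def S(x):
    # the same map shifted by a nonzero vector, which is not linear
    return A @ x + np.array([0.0, 1.0, 0.0])

def preserves_combinations(f, dim, trials=100):
    """Check f(c u + d v) == c f(u) + d f(v) on random u, v, c, d."""
    for _ in range(trials):
        u, v = rng.standard_normal(dim), rng.standard_normal(dim)
        c, d = rng.standard_normal(2)
        if not np.allclose(f(c * u + d * v), c * f(u) + d * f(v)):
            return False
    return True

print('T preserves linear combinations:', preserves_combinations(T, 2))
print('S preserves linear combinations:', preserves_combinations(S, 2))
print('S(0) =', S(np.zeros(2)))   # nonzero, so S cannot be linear
```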

Example 1

Show that

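\(T: \mathbb{R}^2 \rightarrow \mathbb{R}^3\) given by

\[ T\left(\begin{bmatrix} x_1 \\ x_2 \end{bmatrix}\right) = \begin{bmatrix} 2x_2 \\ 0 \\ 3x_1 \end{bmatrix} \]

is a linear transformation.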

Solution:

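\(T\) is linear as shown below. For \(\vec{u} = \begin{bmatrix} u_1 \\ u_2 \end{bmatrix}\), \(\vec{v} = \begin{bmatrix} v_1 \\ v_2 \end{bmatrix}\) and \(c, d \in \mathbb{R}\), we have:

\[ T(c\vec{u} + d\vec{v}) = \begin{bmatrix} 2(cu_2 + dv_2) \\ 0 \\ 3(cu_1 + dv_1) \end{bmatrix} = c\begin{bmatrix} 2u_2 \\ 0 \\ 3u_1 \end{bmatrix} + d\begin{bmatrix} 2v_2 \\ 0 \\ 3v_1 \end{bmatrix} = cT(\vec{u}) + dT(\vec{v}). \]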

Let’s write a Python function for \(T\):

import numpy as np

def T(V):
    W = np.zeros((3, 1))   # start from the zero vector in R^3
    W[0, 0] = 2 * V[1, 0]  # change the first component to 2*x_2
    W[2, 0] = 3 * V[0, 0]  # change the third component to 3*x_1
    return W

Now, let’s plug in some vectors:

# input vectors

V = np.array([[1], [1]])

U = np.array([[0], [0]])

#output vectors

W1 = T(V)

W2 = T(U)

print('T(V): \n \n', W1)

print(10*'*')

print('\n T(U): \n\n', W2)

T(V):

[[2.]

[0.]

[3.]]

**********

T(U):

[[0.]

[0.]

[0.]]

Example 2

Determine if \(T: \mathbb{R}^2 \rightarrow \mathbb{R}^3\) given by

is a linear transformation.

Solution: T is not linear, since

\( T \left( \begin{bmatrix} 0 \\ 0 \end{bmatrix} \right) = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} \neq \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \)

Example 3

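Let

\[ A = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix}. \]

Prove that the transformation

\[ T: \mathbb{R}^3 \to \mathbb{R}^3 \]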

defined by \(T(\vec{x})= A\vec{x}\) is a linear transformation. \(T\) is called the projection onto the \(xy\)-plane.

Solution:

The transformation \(T\) is defined by a matrix multiplication. According to Theorem 1 in the previous section, the matrix product satisfies the linearity property. \(T\) is called a projection because when applied to

\[ \vec{x} = \begin{bmatrix} x \\ y \\ z \end{bmatrix} \]

it doesn’t affect the first two components and replaces \(z\) with zero. Let’s verify this by evaluating it for

\[ \vec{u} = \begin{bmatrix} 1 \\ 3 \\ 5 \end{bmatrix}: \]

# matrix A

A = np.array([[1,0,0],[0,1,0],[0,0,0]])

# vector U

U = np.array([[1,3,5]]).T

print('T maps \n\n', U,'\n\n to \n\n', A @ U)

T maps

[[1]

[3]

[5]]

to

[[1]

[3]

[0]]

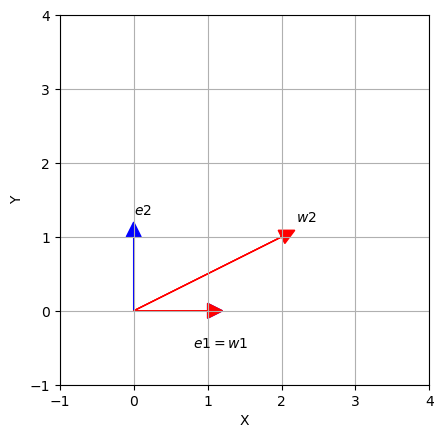

Example 4

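Let

\[ A = \begin{bmatrix} 1 & \lambda \\ 0 & 1 \end{bmatrix}, \quad \lambda \in \mathbb{R}. \]

Then, the transformation

\[ T: \mathbb{R}^2 \to \mathbb{R}^2 \]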

defined by \(T(\vec{x})= A\vec{x}\) is a linear transformation and is called a shear transformation.

For \(\lambda = 2\), we have:

# matrix A

A = np.array([[1,2],[0,1]])

# input data

e1 = np.array([1,0])

e2 = np.array([0,1])

#output

w1 = A @ e1

w2 = A @ e2

print('T maps \n\n', e1,'\n\n to \n\n', w1)

print(10*'*')

print('T maps \n\n', e2,'\n\n to \n\n', w2)

T maps

[1 0]

to

[1 0]

**********

T maps

[0 1]

to

[2 1]

Let’s plot these vectors:

import matplotlib.pyplot as plt

ax = plt.axes()

ax.arrow(0, 0, 1, 0, head_width = 0.2,head_length = 0.2, fc ='b', ec ='b')

ax.arrow(0, 0, 1, 0, head_width = 0.2,head_length = 0.2, fc ='r', ec ='r')

ax.arrow(0, 0, 0, 1, head_width = 0.2,head_length = 0.2, fc ='b', ec ='b')

ax.arrow(0, 0, 2, 1, head_width = 0.2,head_length = 0.2, fc ='r', ec ='r')

ax.text(0.8,0 - 0.5,'$e1 = w1$')

ax.text(0,1.3,'$e2$')

ax.text(2.2,1.2,'$w2$')

ax.set_xticks(np.arange( -1, 5, step = 1))

ax.set_yticks(np.arange( -1, 5, step = 1))

ax.set_aspect('equal')

ax.set_xlabel("X")

ax.set_ylabel("Y")

plt.grid()

plt.show()

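Observe that all points along the \(x\)-axis remain fixed while other points are shifted parallel to the \(x\)-axis by a distance proportional to their perpendicular distance from the \(x\)-axis; more specifically,

\[ T\left(\begin{bmatrix} x \\ y \end{bmatrix}\right) = \begin{bmatrix} x + \lambda y \\ y \end{bmatrix}. \]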

Shearing a plane figure does not change its area. The shear transformation can also be generalized to three dimensions:

Let

Then, the transformation

defined by \(T(\vec{x})= A\vec{x}\) is a shear transformation.
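The claim that shearing a plane figure does not change its area can be checked for the two-dimensional shear with \(\lambda = 2\) used above. The sketch below relies on two standard facts: \(|\det(A)|\) is the factor by which \(A\) scales areas, and the shoelace formula gives the area of a polygon from its vertices. The helper `polygon_area` and the choice of the unit square are assumptions made for this illustration.

```python
import numpy as np

lam = 2.0
A = np.array([[1.0, lam], [0.0, 1.0]])

# |det(A)| is the factor by which A scales areas
det_A = np.linalg.det(A)

def polygon_area(P):
    """Shoelace formula; P is a 2 x k array of vertices listed in order."""
    x, y = P
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

# Vertices of the unit square, stored as columns in counterclockwise order
square = np.array([[0.0, 1.0, 1.0, 0.0],
                   [0.0, 0.0, 1.0, 1.0]])
sheared = A @ square

print('det(A) =', det_A)
print('area before:', polygon_area(square))
print('area after: ', polygon_area(sheared))
```

The unit square is mapped to a parallelogram with vertices \((0,0)\), \((1,0)\), \((3,1)\), \((2,1)\), which has the same area as the square.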

The standard matrix representation of a linear transformation#

As we have seen previously, any \(m \times n\) matrix \(A\) defines a linear transformation \(T: \mathbb{R}^n \rightarrow \mathbb{R}^m\) given by \(\vec{x} \mapsto A\vec{x}\). The converse of this statement is also true, as stated in the following theorem:

Theorem 16

Let \(T: \mathbb{R}^n \rightarrow \mathbb{R}^m\) be a linear transformation. There exists a unique \(m \times n\) matrix \(A\) such that \(T(\vec{x}) = A\vec{x}\) for all \(\vec{x} \in \mathbb{R}^n\). In fact, the \(i\)th column of matrix \(A\) is given by \(T(\vec{e_i})\), where \(\vec{e_i} \in \mathbb{R}^n\) represents the \(i\)th column of the identity matrix \(I_n\).

The matrix \(A\) is called the standard matrix representation of \(T\). The name is motivated by the fact that we are using the standard basis of \(\mathbb{R}^n\), \(\vec{e_i}\)s, to construct \(A\).

Example 5

Find the standard matrix representation of the linear transformation in Example 1.

Solution:

We apply \(T\) to the standard basis vectors \(\vec{e_1} = \begin{bmatrix} 1 \\ 0 \end{bmatrix}\) and \(\vec{e_2} = \begin{bmatrix} 0 \\ 1 \end{bmatrix}\) to obtain the columns of the matrix representation \(D\) of \(T\):

\(T(\vec{e_1}) = \begin{bmatrix} 2(0) \\ 0 \\ 3(1) \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 3 \end{bmatrix}\)

\(T(\vec{e_2}) = \begin{bmatrix} 2(1) \\ 0 \\ 3(0) \end{bmatrix} = \begin{bmatrix} 2 \\ 0 \\ 0 \end{bmatrix}\)

Thus, the standard matrix representation \(D\) of \(T\) is:

\[ D = \begin{bmatrix} 0 & 2 \\ 0 & 0 \\ 3 & 0 \end{bmatrix} \]

Let’s write Python code for this example and verify our solution:

def T(V):
    W = np.zeros((3,1))   # start from the zero vector in R^3
    W[0,0] = 2*V[1,0]     # change the first component to 2*x_2
    W[2,0] = 3*V[0,0]     # change the third component to 3*x_1
    return W

e1 = np.array([[1],[0]])
e2 = np.array([[0],[1]])

# the first column of D
c1 = T(e1)

# the second column of D
c2 = T(e2)

# forming D
D = np.concatenate((c1,c2), axis=1)

D

array([[0., 2.],

[0., 0.],

[3., 0.]])

In Example 1, we saw that \(T(\begin{bmatrix} 1\\1\\ \end{bmatrix})= \begin{bmatrix} 2\\0\\3\\ \end{bmatrix}\). Let’s verify that \(D\begin{bmatrix} 1\\1\\ \end{bmatrix} = \begin{bmatrix} 2\\0\\3\\ \end{bmatrix}\):

v = np.array([[1],[1]])

D @ v

array([[2.],

[0.],

[3.]])
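The construction in Theorem 16 works for any linear transformation, so it can be wrapped in a small reusable function. The following is a minimal sketch; the helper name `standard_matrix` is introduced here for illustration, and it assumes that `T` accepts and returns column vectors stored as two-dimensional NumPy arrays, as in the cells above.

```python
import numpy as np

def standard_matrix(T, n):
    """Stack T(e_1), ..., T(e_n) as the columns of a matrix (hypothetical helper)."""
    I = np.eye(n)
    # I[:, [i]] keeps two dimensions, so it is the basis vector as an n x 1 column
    columns = [T(I[:, [i]]) for i in range(n)]
    return np.hstack(columns)

# The transformation from Example 1
def T(V):
    W = np.zeros((3, 1))
    W[0, 0] = 2 * V[1, 0]
    W[2, 0] = 3 * V[0, 0]
    return W

D = standard_matrix(T, 2)
print(D)

v = np.array([[1], [1]])
print(np.allclose(D @ v, T(v)))   # D v agrees with T(v)
```

Note that this construction only makes sense when `T` is linear; for a non-linear map the resulting matrix would not reproduce `T`.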

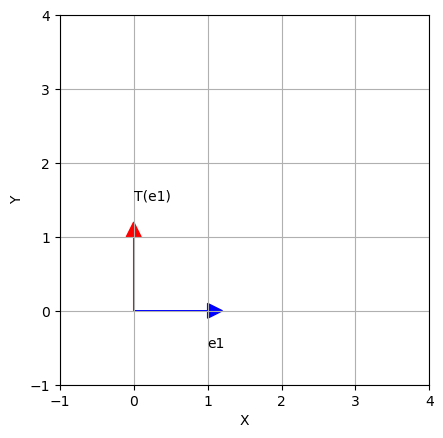

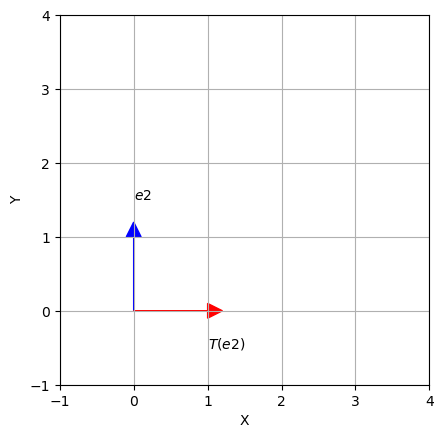

Example 6

Consider the linear transformation \(T: \mathbb{R}^2 \rightarrow \mathbb{R}^2\) defined as follows: \(T\left(\vec{x}\right)\) first reflects \(\vec{x}\) across the \(x\)-axis and then rotates it \(90^\circ\) counterclockwise. Find the standard matrix representation of \(T\).

Solution:

To find the standard matrix representation of \(T\) we apply \(T\) to the standard basis vectors of \(\mathbb{R}^2\):

\(T\) reflects \(\begin{bmatrix} 1 \\ 0 \end{bmatrix}\) to itself and then rotates it to \(\begin{bmatrix} 0 \\ 1 \end{bmatrix}\).

\(T\) reflects \(\begin{bmatrix} 0 \\ 1 \end{bmatrix}\) to \(\begin{bmatrix} 0 \\ -1 \end{bmatrix}\) and then rotates it to \(\begin{bmatrix} 1 \\ 0 \end{bmatrix}\).

Therefore, the standard matrix of \(T\) is

\[ A = \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}. \]

import matplotlib.pyplot as plt

ax = plt.axes()

ax.arrow(0, 0, 1, 0, head_width = 0.2,head_length = 0.2, fc ='b', ec ='b')

ax.arrow(0, 0, 0, 1, head_width = 0.2,head_length = 0.2, fc ='r', ec ='r')

ax.text(1,0 - 0.5,'e1')

ax.text(0,1 + 0.5,'T(e1)')

ax.set_xticks(np.arange( -1, 5, step = 1))

ax.set_yticks(np.arange( -1, 5, step = 1))

ax.set_aspect('equal')

ax.set_xlabel("X")

ax.set_ylabel("Y")

plt.grid()

plt.show()

ax = plt.axes()

ax.arrow(0, 0, 0, 1, head_width = 0.2,head_length = 0.2, fc ='b', ec ='b')

ax.arrow(0, 0, 1, 0, head_width = 0.2,head_length = 0.2, fc ='r', ec ='r')

ax.text(0,1 + 0.5,'$e2$')

ax.text(1,0 - 0.5,'$T(e2)$')

ax.set_xticks(np.arange( -1, 5, step = 1))

ax.set_yticks(np.arange( -1, 5, step = 1))

ax.set_aspect('equal')

ax.set_xlabel("X")

ax.set_ylabel("Y")

plt.grid()

plt.show()
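The standard matrix in this example can be cross-checked by composing the two steps as matrices. The sketch below uses the standard matrices of the reflection across the \(x\)-axis and of the \(90^\circ\) counterclockwise rotation; since the reflection is applied first, its matrix sits on the right of the product. The names `F` and `R` are introduced here only for illustration.

```python
import numpy as np

F = np.array([[1, 0], [0, -1]])   # reflection across the x-axis
R = np.array([[0, -1], [1, 0]])   # rotation by 90 degrees counterclockwise

# T(x) = R (F x), so the standard matrix of T is the product R F
A = R @ F
print(A)

e1 = np.array([[1], [0]])
e2 = np.array([[0], [1]])
print(A @ e1)   # image of e1
print(A @ e2)   # image of e2
```

The order matters here: `F @ R` would describe rotating first and reflecting afterwards, which is a different transformation.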

Exercises#


Determine whether each transformation is linear:

a. \(T: \mathbb{R}^2 \rightarrow \mathbb{R}^2\) given by

b. \(T: \mathbb{R}^2 \rightarrow \mathbb{R}^2\) given by

Find the standard matrix of \(T: \mathbb{R}^2 \rightarrow \mathbb{R}^2\) given by \(T(\vec{x}) =\) first reflect \(\vec{x}\) across the \(x\)-axis and then rotate \(45^\circ\) counterclockwise.

Suppose \(T: \mathbb{R}^2 \rightarrow \mathbb{R}^3\) is a linear map and

Find \(T \begin{bmatrix} 2 \\ 3 \end{bmatrix}. \)