10.3. K-means Clustering#

10.3.1. Clustering of Data#

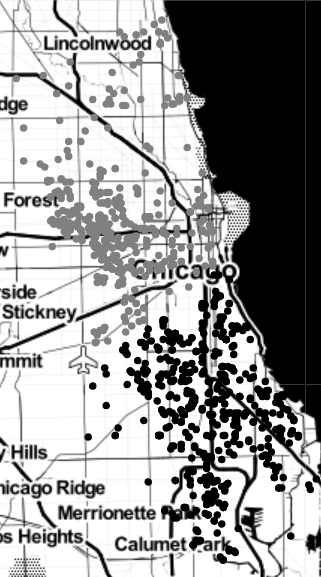

In this section, we will consider the problem of clustering into \(k\) groups a set of unlabelled points in a coordinate plane \(\mathbb{R}^2.\) For example, the figure below is a scatterplot of a data set from the Chicago Data Portal that shows the locations of 1,000 recent acts of violence in the city of Chicago. Police departments may use data like this to help identify clusters or “hot spots” in their violence reduction efforts. Another question is whether these crime locations might be naturally divided in some way, say, into two halves.

We can use \(k\)-means clustering to separate the mapped crime data as points in \(\mathbb{R}^2\) into an arbitrary number of groups solely based on location, as seen in the figure below. This figure shows \(k=2\) clusters. The I-55 Stevenson Expressway, which runs northeast from Stickney to downtown Chicago, is close to the boundary between the two clusters. High-density areas exist within each cluster, one in the West side and the other in much of the South side of the city. These areas reflect a long history of injustices in these parts of Chicago [Amdat 2021].

Data source: Chicago Data Portal https://data.cityofchicago.org/Public-Safety/Violence-Reduction-Victims-of-Homicides-and-Non-Fa/gumc-mgzr.

Data was extracted on December 7th, 2022. Map tiles by Stamen Design, under CC by 3.0. Data by © OpenStreetMap, under ODbL.

As discussed in the previous section on OLS linear regression, minimization of a loss function \(J(c_0,c_1)\) is the basis for finding a line \(y=c_0+c_1 x\) which best fits a collection of data points \((x_1,y_1), (x_2,y_2),\dots (x_n,y_n).\) We show how minimization of a different loss function is basic to a particular method to cluster data into \(k\) groups called k-means clustering. We begin by describing three recursive methods for locating a minimum point of a loss function, the first of which is gradient descent.

10.3.2. Optimization by Gradient Descent#

Recall that given a function \(J(w_1,w_2,\dots,w_s)\), the gradient of \(J\) (denoted \(\nabla J\)) is the vector

If \(J(w_1,.w_2,\dots,w_s)\) has a maximum or minimum at a differentiable interior point \(\mathbf{w}^*=(w_1^*,w_2^*,\dots,w_s^*)\), then

That is, all the partials of \(J\) must equal zero at \(\mathbf{w}^*.\) Hence, to find a max or min point \(\mathbf{w^*}\) of \(J\), we look for a solution to \(\nabla J=\mathbf{0}\).

Let us consider the one-dimensional case to find a minimum point \(w^*\) of a function of one variable \(J=J(w)\). Recall Newton’s method, which says that to find a root \(w^*\) of \(J(w)\), we can use the iterative algorithm

where the sequence \(w_n\rightarrow w^*\).

If we wish to find a root \(w^*\) of \(J'(w)\) rather than of \(J(w)\), Newton’s method becomes

where the sequence \(w_n\rightarrow w^*\). If \(\sigma\) is a positive constant near \(\frac{1}{J''(w^*)}>0\), then we can modify the last equation as

Generalizing to functions of more than one variable, we obtain the gradient descent recursive method to find a minimum point for \(J\):

where \(\sigma\) is a positive constant called the learning rate.

10.3.3. Example 3.1.#

Let \(J(w_1,w_2)=w_1^2+2w_1+w_2^2+4w_2,\) \(\mathbf{w}_0=(0,0)\) and \(\sigma=0.1\). Find \(\mathbf{w}_1\) and \(\mathbf{w}_2.\) To which point do we expect the gradient descent sequence \(\mathbf{w}_n\) to converge?

Solution:

We have \(\nabla J=(2w_1+2,2w_2+4).\)

Note that \(J=(w_1+1)^2+(w_2+2)^2-5\) has a minimum point at \(\mathbf{w}^*=(-1,-2)\). That point is where we would expect the gradient descent sequence \(\mathbf{w}_n\) to converge.

We continue with minimizing a loss function \(J(w_1,w_2,\dots,w_s)\) by introducing two additional methods of optimization.

Coordinate Descent#

In contrast to gradient descent, coordinate descent seeks to minimize the value of \(J\) one coordinate at a time. For example, to minimize \(J\), we begin with an initial point \(\mathbf{w}_0\) which specifies the values for \((w_1,w_2,\dots,w_s)\) and look for the minimum value of \(J\) obtainable by varying \(w_1\) and keep the remaining variables \(w_2,\dots,w_s\) fixed. After finding the value of \(w_1\) that gives a minimum \(J\), we continue by varying the value of \(w_2\) to further minimize \(J\) while keeping the newly obtained \(w_1\) and the other variables fixed. We continue this process until the value of \(J\) remains unchanged (or only changes at each step by an amount less than a specified threshold) when cycling through all \(n\) variables.

Optimization by Coordinate and Block Descent#

Example 3.2.#

Use coordinate descent to minimize

beginning with the initial point \(\mathbf{w}_0= (w_1,w_2)=(0,0)\).

Solution:

First we fix \(w_2=0\) and minimize \(J=(w_1-1)^2+4.\) The value \(w_1=1\) gives the minimum \(J\). Next, we fix \(w_1=1\) and minimize \(J=(w_2-2)^2.\) The value \(w_2=2\) gives the minimum. The result \(J=0\) is unchanged if we cycle through \(w_1\) and then \(w_2\). Hence, in this case, coordinate descent correctly gives \(\mathbf{w}=(1,2)\) as the point that minimizes \(J\).

Block Descent#

Block descent is similar to coordinate descent except we minimize \(J\) over blocks of variables rather than a single variable. The \(k\)-means clustering method is based on block descent, as we now explain.

K-MEANS CLUSTERING OPTIMIZATION PROBLEM#

Find \(\mathbf{z}_1,\dots,\mathbf{z}_k\) and \(y_{i,j}\) that

subject to the constraints \(y_{i,j}\in\{0,1\}\) for all \(i,j\), and \(\sum_{j=1}^k y_{i,j}=1\) (each data point is assigned to exactly one centroid).

In this case, the loss function \(J\) is the total squared distance between data points and their assigned centers (prototypes).

Two blocks of variables are used in block descent minimization of \(J\):

the prototype variables \(\mathbf{z}_1,\dots,\mathbf{z}_k\) and

the assignment variables \(y_{i,j}\) \((1\le i \le m\) and \(1\le j \le k\)).

Block descent in this case results in a two-step iterative algorithm:

step 1) Fix the prototype variables, and then specify \(y_{ij}\) so that each data point \(\mathbf{x}_i\) is assigned to the closest center \(\mathbf{z}_j\).

step 2) Fix each assignment \(y_{i,j}\) and choose the cluster center (prototype) to be the mean of the points in its cluster.

We continue this process until the value of \(J\) either is unchanged or only changes by some amount less than a specified threshold after completing the two steps in the algorithm.

Example 3.3.#

Use \(k\)-means clustering with \(k=2\) to divide the points \(\mathbf{x}_1=(0,0)\), \(\mathbf{x}_2=(10,0)\), \(\mathbf{x}_3=(10,1)\), and \(\mathbf{x}_4=(0,1)\) into two clusters. Begin with the prototypes \(z_1=(0,0)\) and \(z_2=(10,0)\).

Solution:

i) Fix the prototypes \(z_1=(0,0)\) and \(z_2=(10,0)\); we have \(y_{1,1}=1\), \(y_{1,2}=0\), \(y_{2,1}=0\), \(y_{2,2}=1\), \(y_{3,1}=0\), \(y_{3,2}=1\), \(y_{4,1}=1\), and \(y_{4,2}=0\);

ii) Fix each assignment \(y_{i,j}\); then the prototypes are \(\mathbf{z}_1=(0,0.5)\) and \(\mathbf{z}_2=(10,0.5)\).

iii)Fixing the prototypes \(\mathbf{z}_1=(0,.5)\), \(\mathbf{z}_2=(10,.5)\), the assignment variables are the same as before: \(y_{1,1}=1\), \(y_{1,2}=0\), \(y_{2,1}=0\), \(y_{2,2}=1\), \(y_{3,1}=0\), \(y_{3,2}=1\), \(y_{4,1}=1\), and y\(_{4,2}=0\).

iv) Fixing the assignment variables, the prototype variables are the same as before: \(\mathbf{z}_1=(0,0.5)\) and \(\mathbf{z}_2=(10,0.5)\).

Observing that \(y_{1,1}=1\) and \(y_{4,1}=1\), the points \(\mathbf{x_1}=(0,0)\) and \(\mathbf{x_4}=(0,1)\) are assigned to cluster 1. Furthermore, since \(y_{2,2}=1\) and \(y_{3,2}=1\), the points \(\mathbf{x_2}=(10,0)\) and \(\mathbf{x_3}=(10,1)\) are assigned to cluster 2.

Proof of Block Descent’s Stepwise Minimization of \(J\)#

Since \(J\) is the sum of squared distances between data points and their cluster centers, it is clear that if we fix the prototypes, \(J\) will be minimized by assigning each data point to the nearest prototype. We now show that for any fixed choice of assignment variables, the loss function \(J\) is minimized when each prototype is the mean of the points in its cluster.

Let \(J=\sum_{j=1}^k O_j\) where \(O_j=\sum_{i-1}^my_{i,j}\|\mathbf{x}_{i}-\mathbf{z}_j\|^2.\) Note that \(O_j\) can be computed using only the points in cluster \(j\), since otherwise \(y_{i,j}=0\). Denote these points \(\mathbf{u}_1,\dots,\mathbf{u}_{n_j}.\) Then for each \(j\), we minimize

The condition \(\nabla O_j(z_{j,1},\dots,z_{j,m})=(0,\dots,0)\) will be satisfied if we choose \(\mathbf{z}_j\) to be the average of the points \(\mathbf{u}_1,\dots,\mathbf{u}_{n_j}.\) For example,

so the first coordinate of \(z_j\) (namely, \(z_{j,1}\)) is the average of the first coordinates of the points in its cluster. The same argument shows that each coordinate of \(\mathbf{z}_j\) is the mean of the coordinates of points in its cluster.

10.3.4. Exercises#

Exercises

Compute \(\mathbf{w}_1\), \(\mathbf{w}_2\), and \(\mathbf{w}_3\) in the gradient descent sequence \(\mathbf{w}_n\) obtained when minimizing \(J(w_1,w_2)=w_1^2+2w_2^2\) with \(\mathbf{w}_0=(-5,-2)\) and learning rate \(\sigma=0.1.\) To what point will the sequence \(\mathbf{w}_n\) converge?

Use coordinate descent to minimize \(J(w_1,w_2,w_3)=w_1^2+(w_2-1)^2+(w_3-2)^2\) beginning with the initial point \((w_1,w_2,w_3)=(0,0,0)\).

Use \(2\)-means clustering to divide the points \(\mathbf{x}_1=(-1,-1)\), \(\mathbf{x}_2=(-1,0)\), \(\mathbf{x}_3=(-1,1)\), \(\mathbf{x}_4=(1,-1)\), \(\mathbf{x}_5=(1,0)\), and \(\mathbf{x}_6=(1,1)\) into two clusters. Begin with the prototypes \(z_1=(-1,0)\) and \(z_2=(1,0)\).

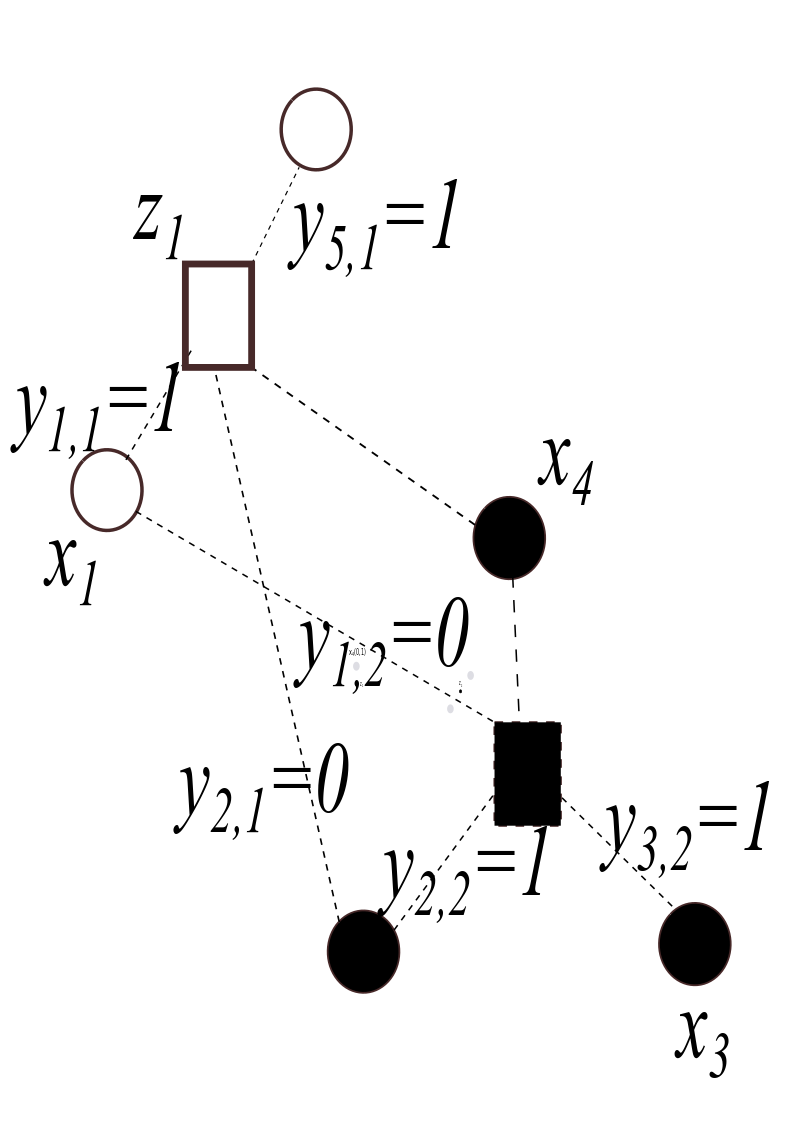

The graph in the figure below shows the positions of five data points \(\mathbf{x}_1, \dots,\mathbf{x}_5\), two cluster centers \(\mathbf{z}_1\) and \(\mathbf{z}_2\), as well as selected values for the assignment variables \(y_{i,j}\). Supply the missing labels for the data points \(\mathbf{x_2}\) and \(\mathbf{x_5}\), cluster center \(\mathbf{z_2}\), and assignment variables \(y_{4,1}\) and \(y_{4,2}\).